Feature engineering

Title feature

It is clear that we cannot use the "Name" variable directly. Names are almost unique, which leaves very little room for generalization. To see why, suppose we learned whether a passenger with a given name survived or not: that tells us nothing about whether another passenger survived if all we know about the new passenger is the name.

Let's inspect some of the values of the "Name" variable.

SolidFrame.wrapOf(train.var("Name")).printLines(20);

Name

[0] "Braund, Mr. Owen Harris"

[1] "Cumings, Mrs. John Bradley (Florence Briggs Thayer)"

[2] "Heikkinen, Miss. Laina"

[3] "Futrelle, Mrs. Jacques Heath (Lily May Peel)"

[4] "Allen, Mr. William Henry"

[5] "Moran, Mr. James"

[6] "McCarthy, Mr. Timothy J"

[7] "Palsson, Master. Gosta Leonard"

[8] "Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg)"

[9] "Nasser, Mrs. Nicholas (Adele Achem)"

[10] "Sandstrom, Miss. Marguerite Rut"

[11] "Bonnell, Miss. Elizabeth"

[12] "Saundercock, Mr. William Henry"

[13] "Andersson, Mr. Anders Johan"

[14] "Vestrom, Miss. Hulda Amanda Adolfina"

[15] "Hewlett, Mrs. (Mary D Kingcome) "

[16] "Rice, Master. Eugene"

[17] "Williams, Mr. Charles Eugene"

[18] "Vander Planke, Mrs. Julius (Emelia Maria Vandemoortele)"

[19] "Masselmani, Mrs. Fatima"

We notice that the names contain the title of the individual. This is valuable, but how can we benefit from it? First of all, notice that the format of the title inside the string is clear: space + title + dot + space. We can try to model a regular expression, or we can take a simpler but manual path. Intuition tells us that there should not be too many keys.
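The regular expression route mentioned above can be sketched in plain Java as follows. This is only an illustration of the "space + title + dot + space" pattern; the class and method names are hypothetical and it does not use rapaio. It returns the first token that looks like a title, so a name where another dotted word precedes the title would need extra care.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TitleRegexSketch {

    // space + letters + dot + space, the format observed in the "Name" values
    private static final Pattern TITLE = Pattern.compile(" ([A-Za-z]+)\\. ");

    /** Returns the first title-like token found in the name, or "?" if there is none. */
    static String extractTitle(String name) {
        Matcher m = TITLE.matcher(name);
        return m.find() ? m.group(1) : "?";
    }

    public static void main(String[] args) {
        String[] names = {
                "Braund, Mr. Owen Harris",
                "Heikkinen, Miss. Laina",
                "Palsson, Master. Gosta Leonard"
        };
        for (String name : names) {
            System.out.println(extractTitle(name) + " <- " + name);
        }
    }
}
```

The manual path taken in the text has one advantage over this sketch: the set of accepted titles is explicit, so an unexpected token cannot silently become a new category.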

We build a set of known keys. Then we filter out the names which contain a known title and print the first twenty of the remaining ones. We already have "Mrs" and "Mr". Let's find the others.

// build incrementally a set with known keys

HashSet<String> keys = new HashSet<>();

keys.add("Mrs");

keys.add("Mr");

// filter out names with known keys

// print first twenty to inspect and see other keys

train.getVar("Name").stream()

.mapToString()

.filter(txt -> {

for(String key : keys)

if(txt.contains(" " + key + ". "))

return false;

return true;

})

.limit(20)

.forEach(WS::println);

"Heikkinen, Miss. Laina"

"Palsson, Master. Gosta Leonard"

"Sandstrom, Miss. Marguerite Rut"

"Bonnell, Miss. Elizabeth"

"Vestrom, Miss. Hulda Amanda Adolfina"

"Rice, Master. Eugene"

"McGowan, Miss. Anna 'Annie'"

"Palsson, Miss. Torborg Danira"

"O'Dwyer, Miss. Ellen 'Nellie'"

"Uruchurtu, Don. Manuel E"

"Glynn, Miss. Mary Agatha"

"Vander Planke, Miss. Augusta Maria"

"Nicola-Yarred, Miss. Jamila"

"Laroche, Miss. Simonne Marie Anne Andree"

"Devaney, Miss. Margaret Delia"

"O'Driscoll, Miss. Bridget"

"Panula, Master. Juha Niilo"

"Rugg, Miss. Emily"

"West, Miss. Constance Mirium"

"Goodwin, Master. William Frederick"

This reduced our search and revealed other titles, such as "Miss" and "Master". Continuing in the same way, we arrive at the following set of keys:

HashSet<String> keys = new HashSet<>();

keys.addAll(Arrays.asList(

"Mrs", "Mme", "Lady", "Countess", "Mr", "Sir",

"Don", "Ms", "Miss", "Mlle", "Master", "Dr",

"Col", "Major", "Jonkheer", "Capt", "Rev"));

Nominal title = train.var("Name").stream()

.mapToString()

.map(txt -> {

for(String key : keys)

if(txt.contains(" " + key + ". "))

return key;

return "?";

})

.collect(Nominal.collector());

DVector.fromCount(true, title).printSummary();

    ?      Mr     Mrs    Miss Master   Don   Rev    Dr   Mme    Ms Major  Lady   Sir  Mlle   Col  Capt Countess Jonkheer

    -      --     ---    ---- ------   ---   ---    --   ---    -- -----  ----   ---  ----   ---  ---- -------- --------

0.000 517.000 125.000 182.000 40.000 1.000 6.000 7.000 1.000 1.000 2.000 1.000 1.000 2.000 2.000 1.000    1.000    1.000

Note that we exhausted the training data: no name is left unmatched (the count for "?" is zero). This is enough. It is possible that new titles appear in the test data. We will treat them as missing values, which is why we return "?" when no match is found. Another thing to notice is that some of the labels appear only a few times. We will merge them into larger categories.

Another useful feature built into rapaio is filters. There are two types of filters: variable filters and frame filters. The nice part about frame filters is that learning algorithms can use them naturally to transform the features of the data. From the learning algorithm's perspective, filters used this way are called input filters. It is important to know that input filters transform the features before the train phase and also in the fit phase.
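The idea of learning a transformation once and applying it both when training and when fitting can be illustrated with a small plain-Java sketch. The class below is hypothetical and is not rapaio's FFilter interface; it also uses a different rule from the one chosen in this tutorial (it replaces rare or unseen labels with "?" instead of merging them into named categories). It only shows where the learned state lives.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for an input filter; not part of rapaio. */
class RareLabelFilterSketch {

    private final int minCount;
    private final Map<String, Integer> counts = new HashMap<>();

    RareLabelFilterSketch(int minCount) {
        this.minCount = minCount;
    }

    /** Learns label frequencies from the training labels only. */
    void fit(List<String> trainLabels) {
        counts.clear();
        for (String label : trainLabels) {
            counts.merge(label, 1, Integer::sum);
        }
    }

    /** Applies the learned state to any data: rare or unseen labels become "?". */
    List<String> apply(List<String> labels) {
        List<String> out = new ArrayList<>();
        for (String label : labels) {
            out.add(counts.getOrDefault(label, 0) >= minCount ? label : "?");
        }
        return out;
    }

    public static void main(String[] args) {
        RareLabelFilterSketch filter = new RareLabelFilterSketch(2);
        List<String> train = Arrays.asList("Mr", "Mr", "Mrs", "Mrs", "Don");
        filter.fit(train);
        // the same learned state is used for training data and for new data
        System.out.println(filter.apply(train));
        System.out.println(filter.apply(Arrays.asList("Mr", "Dona")));
    }
}
```

Because the state is learned only in fit, a label which never appeared in the training data is handled the same way at prediction time as a rare one.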

We will build a frame filter which creates the new title feature.

/**

* Frame filter which adds a title variable based on name variable

*/

class TitleFilter implements FFilter {

private static final long serialVersionUID = -3496753631972757415L;

private HashMap<String, String[]> replaceMap = new HashMap<>();

private Function<String, String> titleFun = txt -> {

for (Map.Entry<String, String[]> e : replaceMap.entrySet()) {

for (int i = 0; i < e.getValue().length; i++) {

if (txt.contains(" " + e.getValue()[i] + ". "))

return e.getKey();

}

}

return "?";

};

@Override

public void fit(Frame df) {

replaceMap.put("Mrs", new String[]{"Mrs", "Mme", "Lady", "Countess"});

replaceMap.put("Mr", new String[]{"Mr", "Sir", "Don", "Ms"});

replaceMap.put("Miss", new String[]{"Miss", "Mlle"});

replaceMap.put("Master", new String[]{"Master"});

replaceMap.put("Dr", new String[]{"Dr"});

replaceMap.put("Military", new String[]{"Col", "Major", "Jonkheer", "Capt"});

replaceMap.put("Rev", new String[]{"Rev"});

}

@Override

public Frame apply(Frame df) {

NominalVar title = NominalVar.empty(0, new ArrayList<>(replaceMap.keySet())).withName("Title");

df.var("Name").stream().mapToString().forEach(name -> title.addLabel(titleFun.apply(name)));

return df.bindVars(title);

}

}

Now let's try a new random forest on the reduced data set with the title feature added.

RandomSource.setSeed(123);

CForest rf = CForest.newRF()

.withInputFilters(

new TitleFilter(),

new FFMapVars("Survived,Sex,Pclass,Embarked,Title")

)

.withClassifier(CTree.newCART().withMinCount(3))

.withRuns(100);

cv(train, rf);

rf.train(train, "Survived");

rf.printSummary();

CFit fit = rf.fit(test);

new Confusion(train.var("Survived"), rf.fit(train).firstClasses()).printSummary();

new Csv().withQuotes(false).write(SolidFrame.wrapOf(

test.var("PassengerId"),

fit.firstClasses().withName("Survived")

), root + "rf2-submit.csv");

Cross validation 10-fold

CV fold: 1, acc: 0.833333, mean: 0.833333, se: NaN

CV fold: 2, acc: 0.831461, mean: 0.832397, se: 0.001324

CV fold: 3, acc: 0.797753, mean: 0.820849, se: 0.020024

CV fold: 4, acc: 0.820225, mean: 0.820693, se: 0.016352

CV fold: 5, acc: 0.764045, mean: 0.809363, se: 0.029023

CV fold: 6, acc: 0.820225, mean: 0.811174, se: 0.026335

CV fold: 7, acc: 0.887640, mean: 0.822097, se: 0.037593

CV fold: 8, acc: 0.820225, mean: 0.821863, se: 0.034811

CV fold: 9, acc: 0.820225, mean: 0.821681, se: 0.032567

CV fold:10, acc: 0.831461, mean: 0.822659, se: 0.030860

=================

mean: 0.822659, se: 0.030860

> Confusion

Ac\Pr | 0 1 | total

----- | - - | -----

0 | >520 29 | 549

1 | 123 >219 | 342

----- | - - | -----

total | 643 248 | 891

Complete cases 891 from 891

Acc: 0.8294052 (Accuracy )

F1: 0.8724832 (F1 score / F-measure)

MCC: 0.6375234 (Matthew correlation coefficient)

Pre: 0.8087092 (Precision)

Rec: 0.9471767 (Recall)

G: 0.8752088 (G-measure)

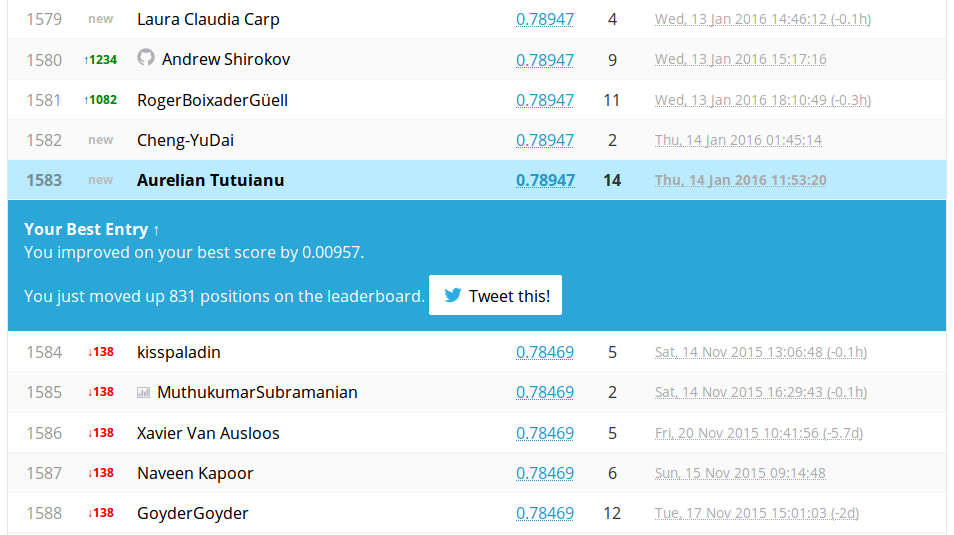

Now that looks definitely better than our best classifier so far. We submit it to Kaggle to see the improvement.

Other features

Various authors have published their work on solving this Kaggle competition. The most interesting part of their work is the feature engineering section. I developed some of those ideas here in order to show how one can do this with the library.

Family size

Using the "SibSp" and "Parch" fields directly yields no value for a random forest classifier. Studying these two features, it looks like their values can be combined into a single one by summation. This gives us a family size estimator.

To get an idea of the performance of this new estimator I used a chi-square independence test. The idea is to study whether those features are worth less taken separately than combined.

// convert sibsp and parch to nominal types to be able to use a chi-square test

NominalVar sibsp = NominalVar.from(train.getRowCount(), row -> train.getLabel(row, "SibSp"));

NominalVar parch = NominalVar.from(train.getRowCount(), row -> train.getLabel(row, "Parch"));

// test individually each feature

ChiSquareTest.independence(train.getVar("Survived"), sibsp).printSummary();

ChiSquareTest.independence(train.getVar("Survived"), parch).printSummary();

// build a combined feature by summation, as nominal

Nominal familySize = Nominal.from(train.rowCount(),

row -> "" + (1 + train.index(row, "SibSp") + train.index(row, "Parch")));

// run the chi-square test on the summation

ChiSquareTest.independence(train.var("Survived"), familySize).printSummary();

> ChiSquareTest.independence

Pearson’s Chi-squared test

data:

1.0 0.0 3.0 4.0 2.0 5.0 8.0 total

0 97.000 398.000 12.000 15.000 15.000 5.000 7.000 549.000

1 112.000 210.000 4.000 3.000 13.000 0.000 0.000 342.000

total 209.000 608.000 16.000 18.000 28.000 5.000 7.000 891.000

X-squared = 37.2717929, df = 6, p-value = 1.5585810465568173E-6

> ChiSquareTest.independence

Pearson’s Chi-squared test

data:

0.0 1.0 2.0 5.0 3.0 4.0 6.0 total

0 445.000 53.000 40.000 4.000 2.000 4.000 1.000 549.000

1 233.000 65.000 40.000 1.000 3.000 0.000 0.000 342.000

total 678.000 118.000 80.000 5.000 5.000 4.000 1.000 891.000

X-squared = 27.9257841, df = 6, p-value = 9.703526421045439E-5

> ChiSquareTest.independence

Pearson’s Chi-squared test

data:

2 1 5 3 7 6 4 8 11 total

0 72.000 374.000 12.000 43.000 8.000 19.000 8.000 6.000 7.000 549.000

1 89.000 163.000 3.000 59.000 4.000 3.000 21.000 0.000 0.000 342.000

total 161.000 537.000 15.000 102.000 12.000 22.000 29.000 6.000 7.000 891.000

X-squared = 80.6723134, df = 8, p-value = 3.574918139293004E-14

How can we interpret the results? The tests say that each feature brings value on its own. The p-values of the first two tests can both be considered significant. That means there is strong evidence that those features are not independent of the target class. In conclusion, those features are useful. The last test is made on their summation. It looks like this test is more significant than the previous two. As a consequence, we can use the summation instead of the two values taken separately.
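To make the statistic behind these summaries concrete, here is a plain-Java sketch of Pearson's chi-squared statistic and its degrees of freedom, applied to the counts of the first table above. The class name is hypothetical and this is not rapaio's ChiSquareTest. The p-value is left out because it needs the chi-squared distribution function, which the Java standard library does not provide.

```java
class ChiSquareSketch {

    /** Pearson's chi-squared statistic for a contingency table of observed counts. */
    static double statistic(double[][] observed) {
        int rows = observed.length;
        int cols = observed[0].length;
        double[] rowSum = new double[rows];
        double[] colSum = new double[cols];
        double total = 0;
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                rowSum[i] += observed[i][j];
                colSum[j] += observed[i][j];
                total += observed[i][j];
            }
        }
        double x2 = 0;
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                // expected count under independence of rows and columns
                double expected = rowSum[i] * colSum[j] / total;
                double diff = observed[i][j] - expected;
                x2 += diff * diff / expected;
            }
        }
        return x2;
    }

    /** Degrees of freedom: (rows - 1) * (columns - 1). */
    static int degreesOfFreedom(double[][] observed) {
        return (observed.length - 1) * (observed[0].length - 1);
    }

    public static void main(String[] args) {
        // counts copied from the first test output above (rows: Survived 0 and 1)
        double[][] firstTable = {
                {97, 398, 12, 15, 15, 5, 7},
                {112, 210, 4, 3, 13, 0, 0}
        };
        System.out.println("X-squared = " + statistic(firstTable));
        System.out.println("df = " + degreesOfFreedom(firstTable));
    }
}
```

Run on the first table, the statistic should agree with the X-squared value of about 37.27 and df = 6 reported above. A larger statistic at comparable degrees of freedom is what makes the test on the summation more significant.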

Cabin and Ticket

It seems that the cabin and ticket denominations are not useful as they are, for several reasons. First of all, they have many missing values. But a stronger reason is that both have too many levels to provide a solid base for generalization.

If we take only the first letter of each of those two fields, more generalization can happen. Perhaps some localization information is encoded there, such as the deck, the comfort level, or auxiliary functions. It is worth a try, so we proceed with it.
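The first-letter reduction itself is tiny. A plain-Java sketch, independent of rapaio and with a hypothetical helper name, could look like this; missing values are mapped to "?", the same marker used for unknown titles.

```java
import java.util.Locale;

class FirstLetterSketch {

    /** First character of the value, upper-cased, or "?" when the value is missing. */
    static String firstLetter(String value) {
        if (value == null || value.trim().isEmpty()) {
            return "?";
        }
        return value.trim().substring(0, 1).toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        // sample values in the style of the Cabin and Ticket fields
        String[] values = {"C85", "a/5 21171", "PC 17599", null};
        for (String value : values) {
            System.out.println(value + " -> " + firstLetter(value));
        }
    }
}
```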

To combine all of these we can use a single filter or several filters applied on the data. I chose to write a single custom filter to solve all those problems.

class CustomFilter implements FFilter {

private static final long serialVersionUID = -3496753631972757415L;

private HashMap<String, String[]> replaceMap = new HashMap<>();

private Function<String, String> titleFun = txt -> {

for (Map.Entry<String, String[]> e : replaceMap.entrySet()) {

for (int i = 0; i < e.getValue().length; i++) {

if (txt.contains(" " + e.getValue()[i] + ". "))

return e.getKey();

}

}

return "?";

};

@Override

public void fit(Frame df) {

replaceMap.put("Mrs", new String[]{"Mrs", "Mme", "Lady", "Countess"});

replaceMap.put("Mr", new String[]{"Mr", "Sir", "Don", "Ms"});

replaceMap.put("Miss", new String[]{"Miss", "Mlle"});

replaceMap.put("Master", new String[]{"Master"});

replaceMap.put("Dr", new String[]{"Dr"});

replaceMap.put("Military", new String[]{"Col", "Major", "Jonkheer", "Capt"});

replaceMap.put("Rev", new String[]{"Rev"});

}

@Override

public Frame apply(Frame df) {

NominalVar title = NominalVar.empty(0, new ArrayList<>(replaceMap.keySet())).withName("Title");

df.getVar("Name").stream().mapToString().forEach(name -> title.addLabel(titleFun.apply(name)));

Var famSize = NumericVar.from(df.rowCount(), row ->

1.0 + df.getIndex(row, "SibSp") + df.getIndex(row, "Parch")

).withName("FamilySize");

Var ticket = NominalVar.from(df.rowCount(), row ->

df.isMissing(row, "Ticket") ? "?" : df.getLabel(row, "Ticket")

.substring(0, 1).toUpperCase()

).withName("Ticket");

Var cabin = NominalVar.from(df.rowCount(), row ->

df.isMissing(row, "Cabin") ? "?" : (df.getLabel(row, "Cabin")

.substring(0, 1).toUpperCase())

).withName("Cabin");

return df.removeVars("Ticket,Cabin").bindVars(famSize, ticket, cabin, title).solidCopy();

}

}

Another try with random forests

So we have some new features and we want to learn from them. We can use a previous classifier, such as random forests, to test them before we submit.

But we know that we are in danger of overfitting with random forests. One idea is to transform numeric features into nominal ones by a process named discretization. For this purpose we use a filter from the library called FFQuantileDiscrete. This filter computes a given number of quantile intervals and labels the numerical values according to those intervals. Let's see how we proceed and what the data looks like:

FFilter[] inputFilters = new FFilter[]{

new CustomFilter(),

new FFQuantileDiscrete(10, "Age"),

new FFQuantileDiscrete(10, "Fare"),

new FFQuantileDiscrete(3, "SibSp"),

new FFQuantileDiscrete(3, "Parch"),

new FFQuantileDiscrete(8, "FamilySize"),

new FFMapVars("Survived,Sex,Pclass,Embarked,Title,Age,Fare,FamilySize,Ticket,Cabin")

};

// print a summary of the transformed data

train.applyFilters(inputFilters).printSummary();

> printSummary(frame, [Survived, Sex, Pclass, Embarked, Title, Age, Fare, FamilySize,

Ticket, Cabin])

rowCount: 891

complete: 183/891

varCount: 10

varNames:

0. Survived : NOMINAL | 4. Title : NOMINAL | 8. Ticket : NOMINAL |

1. Sex : NOMINAL | 5. Age : NOMINAL | 9. Cabin : NOMINAL |

2. Pclass : NUMERIC | 6. Fare : NOMINAL |

3. Embarked : NOMINAL | 7. FamilySize : NOMINAL |

Survived Sex Pclass Embarked Title Age

0 : 549 male : 577 Min. : 1.000 S : 644 Mr : 520 31.8~36 : 91

1 : 342 female : 314 1st Qu. : 2.000 C : 168 Miss : 184 14~19 : 87

Median : 3.000 Q : 77 Mrs : 128 41~50 : 78

Mean : 2.309 NA's : 2 Master : 40 -Inf~14 : 77

2nd Qu. : 3.000 Dr : 7 22~25 : 70

Max. : 3.000 Rev : 6 (Other) : 244

(Other) : 6 NA's : 177

Fare FamilySize Ticket Cabin

7.854~8.05 : 106 -Inf~1 : 537 3 : 301 C : 59

-Inf~7.55 : 92 1~2 : 161 2 : 183 B : 47

27~39.688 : 91 2~3 : 102 1 : 146 D : 33

21.679~27 : 89 3~Inf : 91 P : 65 E : 32

39.688~77.958 : 89 S : 65 A : 15

14.454~21.679 : 88 C : 47 (Other) : 5

(Other) : 336 (Other) : 84 NA's : 687

We can see that the "Age" values are now intervals, and that the missing values are still there.

The numbers of quantiles chosen are more or less arbitrary. There are no numbers which are good in general, only numbers which are good for a specific purpose.
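For readers who want to see what quantile discretization does to a numeric variable, here is a minimal plain-Java sketch. It is not the implementation of FFQuantileDiscrete: the quantile definition (nearest rank), the handling of values that fall exactly on a cut point, and all names are assumptions made for illustration. It assumes at least one non-missing value and represents missing values as NaN.

```java
import java.util.Arrays;

class QuantileDiscreteSketch {

    /** Cut points at the k/bins quantiles (nearest rank), ignoring missing values (NaN). */
    static double[] cutPoints(double[] values, int bins) {
        double[] sorted = Arrays.stream(values)
                .filter(v -> !Double.isNaN(v))
                .sorted()
                .toArray();
        double[] cuts = new double[bins - 1];
        for (int k = 1; k < bins; k++) {
            int rank = (int) Math.ceil(k * sorted.length / (double) bins);
            cuts[k - 1] = sorted[Math.max(rank - 1, 0)];
        }
        return cuts;
    }

    /** Interval label "lo~hi" for x; a missing value stays missing ("?"). */
    static String label(double x, double[] cuts) {
        if (Double.isNaN(x)) {
            return "?";
        }
        int bin = 0;
        while (bin < cuts.length && x > cuts[bin]) {
            bin++;
        }
        String lo = bin == 0 ? "-Inf" : String.valueOf(cuts[bin - 1]);
        String hi = bin == cuts.length ? "Inf" : String.valueOf(cuts[bin]);
        return lo + "~" + hi;
    }

    public static void main(String[] args) {
        double[] values = {1, 2, 3, 4, 5, 6, 7, 8, Double.NaN};
        double[] cuts = cutPoints(values, 4);
        for (double v : values) {
            System.out.println(v + " -> " + label(v, cuts));
        }
    }
}
```

As in the summary above, the first and last intervals are open ended and missing values are not assigned to any interval.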

As promised, we will give another random forest a try to see if it generalizes better.

RandomSource.setSeed(123);

CForest model = CForest.newRF()

.withInputFilters(inputFilters)

.withMCols(4)

.withBootstrap(0.7)

.withClassifier(CTree.newCART()

.withFunction(CTreePurityFunction.GainRatio).withMinGain(0.001))

.withRuns(200);

model.train(train, "Survived");

CFit fit = model.fit(test);

new Confusion(train.getVar("Survived"), model.fit(train).firstClasses()).printSummary();

cv(train, model);

I tried some ideas to make the forest generalize better.

- Smaller bootstrap percentage - this could lead to increased independence of trees

- Use GainRatio as purity function, because it is sometimes more conservative

- Use MinGain to avoid growing trees which have many leaves with a single instance

- Use mCols=4, the number of variables used for testing - more than the default value, to improve the quality of each tree
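The first idea in the list can be pictured with a short plain-Java sketch. It assumes that a bootstrap percentage of 0.7 means drawing 70% of the row count, with replacement, for each tree; whether CForest does exactly this is an assumption, and the names below are hypothetical.

```java
import java.util.Random;

class BootstrapSketch {

    /** Draws round(fraction * n) row indices uniformly at random, with replacement. */
    static int[] sample(int n, double fraction, Random random) {
        int size = (int) Math.round(fraction * n);
        int[] rows = new int[size];
        for (int i = 0; i < size; i++) {
            rows[i] = random.nextInt(n);
        }
        return rows;
    }

    public static void main(String[] args) {
        // 891 is the number of rows in the training frame
        int[] rows = sample(891, 0.7, new Random(123));
        System.out.println("rows drawn for one tree: " + rows.length);
    }
}
```

With smaller samples, two trees share fewer rows on average, which is the intuition behind the increased independence mentioned in the list.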

> Confusion

Ac\Pr | 0 1 | total

----- | - - | -----

0 | >532 17 | 549

1 | 33 >309 | 342

----- | - - | -----

total | 565 326 | 891

Complete cases 891 from 891

Acc: 0.9438833 (Accuracy )

F1: 0.9551167 (F1 score / F-measure)

MCC: 0.880954 (Matthew correlation coefficient)

Pre: 0.9415929 (Precision)

Rec: 0.9690346 (Recall)

G: 0.9552152 (G-measure)

Cross validation 10-fold

CV fold: 1, acc: 0.833333, mean: 0.833333, se: NaN

CV fold: 2, acc: 0.786517, mean: 0.809925, se: 0.033104

CV fold: 3, acc: 0.842697, mean: 0.820849, se: 0.030099

CV fold: 4, acc: 0.898876, mean: 0.840356, se: 0.046109

CV fold: 5, acc: 0.831461, mean: 0.838577, se: 0.040129

CV fold: 6, acc: 0.842697, mean: 0.839263, se: 0.035932

CV fold: 7, acc: 0.853933, mean: 0.841359, se: 0.033267

CV fold: 8, acc: 0.764045, mean: 0.831695, se: 0.041179

CV fold: 9, acc: 0.842697, mean: 0.832917, se: 0.038694

CV fold:10, acc: 0.842697, mean: 0.833895, se: 0.036612

=================

mean: 0.833895, se: 0.036612

These are the results. At first glance this might seem like an astonishing result. But we know that the irreducible error for this data set is high and is close to . It seems obvious that we failed to reduce the variance and that we still overfit a lot with this construct. Since this is a tutorial I will not insist on improving this model, and I think that even if it were improved, the gain would be very small. Perhaps another approach would be better.
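The overfitting can be read directly from the numbers printed above by comparing the accuracy on the training data with the cross-validated accuracy. The plain-Java sketch below does the arithmetic, using hypothetical helper names. The "se" column of the cross-validation output appears to be the sample standard deviation of the fold accuracies so far, which is what the sketch computes.

```java
class OverfitGapSketch {

    /** Accuracy from a square confusion matrix: diagonal sum divided by total. */
    static double accuracy(int[][] confusion) {
        double correct = 0;
        double total = 0;
        for (int i = 0; i < confusion.length; i++) {
            for (int j = 0; j < confusion[i].length; j++) {
                total += confusion[i][j];
                if (i == j) {
                    correct += confusion[i][j];
                }
            }
        }
        return correct / total;
    }

    static double mean(double[] xs) {
        double sum = 0;
        for (double x : xs) {
            sum += x;
        }
        return sum / xs.length;
    }

    /** Sample standard deviation (divides by n - 1). */
    static double sampleSd(double[] xs) {
        double m = mean(xs);
        double ss = 0;
        for (double x : xs) {
            ss += (x - m) * (x - m);
        }
        return Math.sqrt(ss / (xs.length - 1));
    }

    public static void main(String[] args) {
        // values copied from the confusion matrix and the CV output above
        int[][] trainConfusion = {{532, 17}, {33, 309}};
        double[] folds = {0.833333, 0.786517, 0.842697, 0.898876, 0.831461,
                0.842697, 0.853933, 0.764045, 0.842697, 0.842697};
        double trainAcc = accuracy(trainConfusion);
        double cvAcc = mean(folds);
        System.out.println("training accuracy: " + trainAcc);
        System.out.println("cv accuracy:       " + cvAcc + " (sd " + sampleSd(folds) + ")");
        System.out.println("gap:               " + (trainAcc - cvAcc));
    }
}
```

A gap of roughly eleven percentage points between the two accuracies is what the paragraph above refers to as overfitting.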